In 2023 a colleague of mine, Simon Worley, and I started writing what we hoped would be a short guide on AI in Education. ChatGPT had exploded onto the scene, and the time seemed ripe to dive in, as folks in the education sphere were hungrier than ever to explore the benefits and risks of this new tool. Unfortunately, with every re-edit, every iteration, and every additional chapter, it became more obvious to us that anything we released would inevitably be dated by the time it came out. And so we were left with pages and pages of effort that have sat for a year in a shared file, never having seen the light of day. So, if for no other reason than to feel like our efforts can be usefully shared, I thought I’d grab a few excerpts from what we wrote and turn them into a few blog posts instead. So for the handful of people who read this, please enjoy! And try not to hold it against us if the information is out of date by the time you read it.

Note that images included in this post have been generated by ChatGPT’s Image Generator.

Generative A.I. in Schools (Part 1)

Whether you like it or not, Artificial Intelligence is here and it’s here to stay. While this may sound or feel disturbing, take comfort in knowing that you’ve likely been using AI for the better part of the 21st century. OK, that may not be THAT comforting, but the truth is that AI has been making our lives easier in the background for years. From the moment we wake up to the time we lay our heads down at night, AI is quietly working behind the scenes, reshaping our world and influencing how we interact with it.

Our goal in writing this is to dispel some of the myths or fears you may have about AI and, with our help, to show you how to harness this powerful tool to create richer, more dynamic learning experiences. The technological revolution is upon us, and we are already starting to see the profound impact AI will have on education and on our lives more broadly. The fact that you are reading this means that you don’t want to end up like a Luddite and are open to expanding your world and your students’ learning potential. We are on the cusp of what many call the fourth industrial revolution, and while AI can be a scary thought, we would like to take you on a journey and help you discover new and interesting ways to incorporate AI into your classroom.

Let’s kick this off with the story of schoolteacher Maya. Her day may seem very similar to yours and might just highlight some of the many ways AI can help, rather than hinder.

In your city in a not-too-distant future, where the digital world seamlessly intertwines with the physical, lives Maya. Maya is 31 and has been an educator since graduating from teachers’ college at 24. Her day begins with a familiar sound – her iPhone alarm, gently rousing her from slumber. After getting up and brushing her teeth, Maya asks Siri what the weather will be like today. Siri politely replies, “Good morning, Maya. The weather outside is partly cloudy with a low of 14 degrees Celsius and a high of 25.” With a yawn and a nod, Maya asks Siri a few more questions about the news that broke while she slept and what is on her day planner.

As she gets dressed, Maya browses through her favourite online shopping platform, Amazon. With each click and scroll, AI algorithms analyze her previous purchases, identifying her preferences and suggesting products that are likely to align with her tastes. “Here are some recommendations for you, Maya.” The app presents a curated list, displaying an array of stylish clothing, accessories, and materials for her classroom. Maya selects a few items, marveling at the accuracy with which the AI seems to understand her style.

Breakfast in hand, Maya sits down with her tablet to catch up on the latest news. Her Instagram and TikTok feeds are populated with videos and posts tailored precisely to her interests, courtesy of AI’s content curation. Yet her eyes linger instead on a headline about AI-generated deep fake videos infiltrating the media landscape. She shakes her head in disbelief, contemplating the growing challenges posed by such technology.

Maya is glad to leave behind the world of deep fakes as she heads out on her morning commute. Using her Waze GPS app, she navigates the city’s labyrinthine streets with ease. The app analyzes real-time traffic data, suggesting alternate routes to avoid congested areas. Along the way, Maya listens to The EdTech Podcast, suggested to her by an AI-driven content recommendation system.

Maya reaches work, a local high school where she teaches English, excited for the day’s lessons. The classroom is equipped with interactive whiteboards that respond to touch, creating an engaging environment for students. Maya’s teaching is also enhanced by AI-powered tools that adapt learning materials to individual student needs, ensuring that each student can excel at their own pace. She has students working with AI tutors such as Khanmigo, predictive text models like ChatGPT, and AI website builders to accomplish their tasks.

During lunch break, Maya receives an email from a colleague suggesting she try Duolingo, a language-learning app that uses AI to teach new languages. Intrigued, she downloads the app and finds its speech recognition technology impressive, as it provides instant feedback on her pronunciation.

The afternoon is a flurry of activity, and Maya relies on her AI-powered to-do list to keep track of her tasks. As the day winds down, she reflects on the incredible ways AI has woven itself into her life. From planning her day to assisting her teaching, AI has become an indispensable ally.

On her way home, Maya ponders the delicate balance between the promise of AI and its potential pitfalls. The deep fake issue continues to gnaw at her thoughts. As an educator, she knows it is essential to equip her students with the critical thinking skills to distinguish truth from fiction, especially in an age where reality can be manipulated so convincingly.

Back in her apartment, Maya adjusts the smart lighting system with a voice command, setting the ambiance for an evening yoga session. She decides to end the day by reading a book… an ACTUAL book – a realm still untouched by AI-generated content. As she immerses herself in the pages, she takes some solace in the written word, appreciating the irreplaceable essence of human creativity.

In the interconnected tapestry of Maya’s day, AI is both a silent companion and a formidable force, shaping her experiences and interactions; a reminder that while AI holds tremendous potential, it is the human touch that still grounds our world in authenticity and integrity.

As you can see, Maya has interacted and engaged with AI systems during every major part of her day. We hope that you saw a little bit of yourself in that story and you can see how Artificial Intelligence is all around us; we just may not have recognized it.

Before we move on, we would like to circle back to the alarming detail of deep fakes that surfaced throughout the story. One of the reasons AI gets a bad rap is that we fear the unknown, and we still don’t know its true potential. Mention the name ‘Skynet’ and anyone who’s seen Terminator 2: Judgment Day will get a chill down their spine. Like any new technology, AI has the power to change the world, for better or for worse.

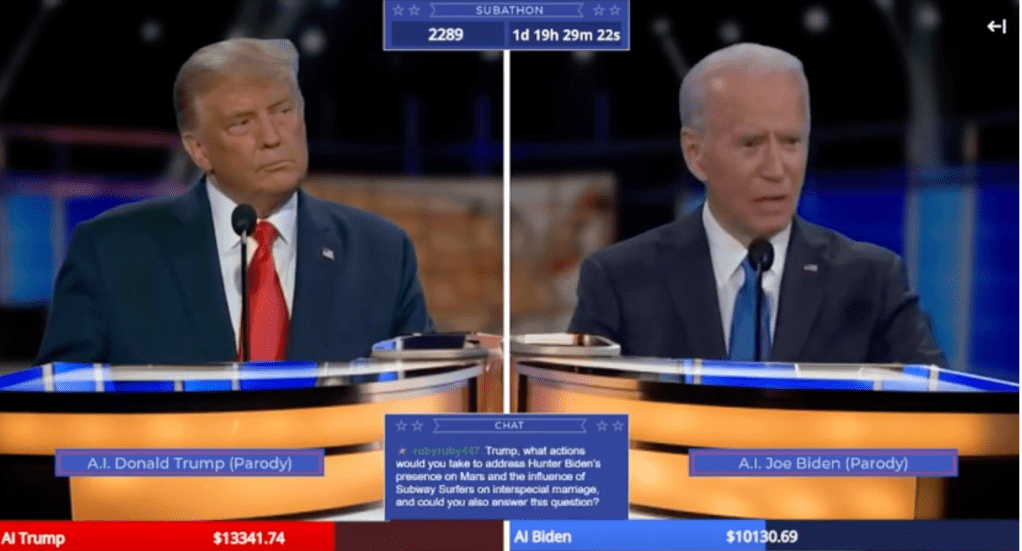

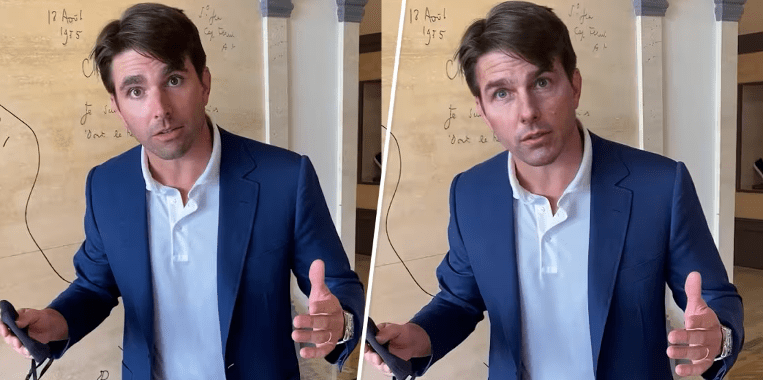

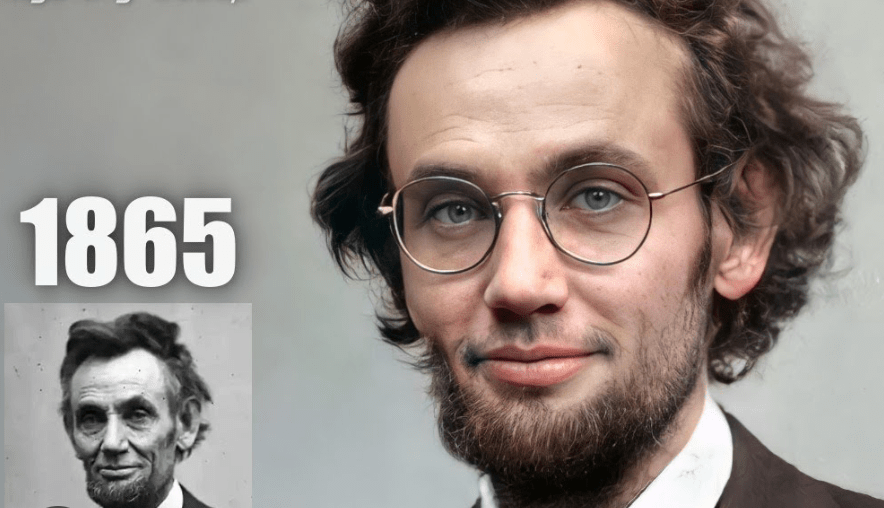

AI-generated deep fakes are manipulated digital media, often videos or audio recordings, that convincingly depict individuals saying or doing things they never actually said or did. You may have seen these in the news or on YouTube.

A fictional, yet convincing, debate between President Biden and Donald Trump.

A Tom Cruise video where his face is superimposed onto the body of an actor in a scene from a movie or TV show that he wasn’t actually in.

Recreations of historical figures that make it seem as if they are speaking about contemporary issues. This can blur the lines between the past and present, potentially distorting historical context and making Honest Abe Lincoln not so honest.

So far, these deep fakes have mainly been used for entertainment and parody, placing well-known individuals in absurd or fictional situations for comedic effect. But it doesn’t take a big leap in logic to see how worrying they can be. Deep fakes have been used to create news reports or interviews that appear authentic but are entirely fabricated, which can spread false information and fuel misinformation campaigns. There has been much debate about their role in the 2016 and 2020 American elections. AI-powered voice cloning can also replicate a person’s voice with remarkable accuracy, and deep fake audio clips have been created to mimic individuals for deceptive purposes, such as fraud or spreading misinformation. AI will continue to evolve at a rapid pace, and we, as an educated society, have to understand both the uses and the pitfalls of this uncharted new technology.

Starting with Why?

Over the past year, the term AI has been ubiquitous. Every major publication has covered it extensively, and thousands of new content creators have sprung up, promising to fill you in on ‘The Most Powerful AI tool on the Market!’ You can’t go onto social media without being bombarded with new AI products and side hustles. With all this publicity comes new and often confusing terminology: generative AI, predictive text, deep learning, image recognition, emergence, large language models; the list goes on and on.

The goal here is to get you up to speed on the basics of how these programs work, what each one does, and how they could evolve in the near future.

Let’s start with ChatGPT, the most popular AI application as of this writing. ChatGPT is built on a generative AI model. Generative AI works by learning patterns and relationships from a large dataset and then generating new content based on what it has learned. As we go through each step of the generative process, we will add easy-to-understand examples to illustrate the idea being presented. Our examples will feature Maya, the protagonist of our story. She is preparing a lesson on volcanoes and is using ChatGPT to learn more about them, as well as to get ideas for lessons, activities, and major assignments.

Training Data: Generative AI models are trained on massive amounts of data, which can include text, images, or other types of information. For text-based models like GPT-3, this training data consists of a wide variety of text from books, articles, websites, and more. GPT-3 was trained on a mix of datasets, including a filtered version of the Common Crawl web archive (about 45 terabytes of compressed raw text before filtering, roughly 570 gigabytes after), WebText2, two collections of books, and English-language Wikipedia. When Maya types ‘What is lava made of?’, ChatGPT provides her with a detailed response. It does not look this answer up in its training data; instead, it generates the response word by word, using the patterns it learned from that data during training.
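For readers who want to peek under the hood, here is a toy Python sketch of how raw text can be turned into training examples for next-word prediction. It is our own simplified illustration, not how GPT-3 was actually built: splitting on spaces and using a three-word context are assumptions for readability, since real models break text into subword “tokens” and look at far longer contexts.

```python
def make_training_pairs(text, context_size=3):
    """Turn raw text into (context, next_word) examples for next-word prediction.

    Simplification: we split on spaces; real models use subword tokens.
    """
    words = text.lower().split()
    pairs = []
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])  # the words the model "sees"
        pairs.append((context, words[i]))           # the word it must learn to predict
    return pairs


sample = "Lava is molten rock that erupts from a volcano"
for context, target in make_training_pairs(sample):
    print(" ".join(context), "->", target)
# first line printed: lava is molten -> rock
```

Every sentence in the training data yields many small “guess the next word” exercises like these, which is why the sheer volume of text matters so much.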

Learning Patterns: During the training process, the AI model learns to recognize patterns, relationships, and structures present in the training data. It learns how words and phrases are used in different contexts, which allows it to understand grammar, syntax, and semantics. Because these patterns are stored in the model itself rather than looked up each time, ChatGPT can respond to a user’s prompt very quickly. Maya is impressed by the speed and accuracy of the AI tool: it takes only about four seconds to start generating an answer to her question, ‘What is the largest active volcano in the world?’
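To make “learning patterns” concrete, here is a deliberately tiny Python sketch that simply counts which word follows which in a handful of made-up sentences. Counting word pairs (a “bigram” model) is a much older and simpler technique than the neural network inside ChatGPT; we use it only as a stand-in to show that patterns are extracted once, during training, and then reused without searching the original text.

```python
from collections import Counter, defaultdict


def learn_patterns(corpus):
    """Count, for every word, which words follow it and how often."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


corpus = [
    "the volcano erupted",
    "the volcano is dormant",
    "the volcano erupted again",
    "the lava is hot",
]
patterns = learn_patterns(corpus)
print(patterns["volcano"].most_common())  # [('erupted', 2), ('is', 1)]
print(patterns["the"].most_common(1))     # [('volcano', 3)]
```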

Neural Networks: Generative AI models typically use neural networks, which are computational structures loosely inspired by the human brain. These networks consist of layers of interconnected nodes (also called neurons) that process and transform data. Each connection carries a numerical weight, and training adjusts these weights so that the network builds up associations within the data. Maya’s brain does something similar: after a few hours of researching volcanoes, she has formed new connections between neurons that link pieces of information about volcanoes, making them easier to remember.
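Here is a minimal Python sketch of a single artificial neuron, plus one simple way its weights can be adjusted during training (gradient descent on squared error). The inputs, starting weights, learning rate, and number of steps are arbitrary values we chose for illustration. Real networks have billions of weights and use more elaborate training methods, but the broad idea of nudging weights to reduce error carries over.

```python
import math


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, squashed into 0..1 by a sigmoid."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 / (1 + math.exp(-total))


def train_step(inputs, weights, bias, target, learning_rate=0.5):
    """One gradient-descent step on the squared error 0.5 * (output - target) ** 2."""
    output = neuron(inputs, weights, bias)
    # Gradient of the error with respect to the weighted sum (chain rule through the sigmoid).
    delta = (output - target) * output * (1 - output)
    new_weights = [w - learning_rate * delta * x for x, w in zip(inputs, weights)]
    new_bias = bias - learning_rate * delta
    return new_weights, new_bias


inputs, target = [1.0, 0.0], 1.0   # one made-up training example
weights, bias = [0.1, -0.2], 0.0   # arbitrary starting weights
before = neuron(inputs, weights, bias)
for _ in range(50):
    weights, bias = train_step(inputs, weights, bias, target)
after = neuron(inputs, weights, bias)
print(f"output before training: {before:.3f}, after: {after:.3f}")
```

The output creeps toward the target of 1.0 with each step. Note that the weight attached to the input of 0.0 never changes, because that input contributed nothing to the error.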

Fine-Tuning: After an initial phase of pre-training on the general dataset, the model can undergo fine-tuning on more specific tasks or domains. This helps the model adapt its general knowledge to more specialized tasks. After Maya has gone down the volcano rabbit hole, she has a great foundation and can now dive deeper into specific topics of interest.
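Continuing with a toy word-pair counter (again, a simple stand-in for a real neural network), the sketch below shows the spirit of fine-tuning: a model first “pre-trained” on general sentences keeps training on a small, specialized set of volcano notes, and its predictions shift toward that domain while its earlier patterns remain. The sentences and the extra weight given to the new data are made-up assumptions for the example.

```python
from collections import Counter, defaultdict


def train(follows, corpus, weight=1):
    """Add word-pair counts from `corpus`; calling it again continues training."""
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += weight
    return follows


# "Pre-training" on general sentences (made up for this example).
general = ["the cat sat down", "the cat slept", "the dog ran"]
model = train(defaultdict(Counter), general)
print(model["the"].most_common(1))  # [('cat', 2)]

# "Fine-tuning" on a small, specialized set of notes. The weight of 2 is an
# arbitrary assumption standing in for the extra emphasis on the new data.
volcano_notes = ["the magma rises", "the magma cools", "the crater smokes"]
model = train(model, volcano_notes, weight=2)
print(model["the"].most_common(1))  # [('magma', 4)]
print(model["cat"].most_common())   # earlier patterns remain: [('sat', 1), ('slept', 1)]
```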

Generating Content: Once the model is trained, it can generate content by predicting the next word or sequence of words based on the input it receives. For example, if Maya gives a prompt like “What makes a volcano dormant is…”, the model can generate a coherent and contextually relevant continuation.
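The sketch below shows next-word generation in its simplest form: using word-pair counts from a few made-up sentences, it repeatedly appends the single most likely next word. Real models predict from the whole context rather than just the last word, and usually sample among likely words instead of always taking the top one, so treat this as an illustration of the idea rather than how ChatGPT is implemented.

```python
from collections import Counter, defaultdict


def learn(corpus):
    """Count which word follows which (a toy stand-in for a trained model)."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def continue_text(follows, prompt, max_words=10):
    """Greedily append the most likely next word, one word at a time."""
    words = prompt.lower().split()
    for _ in range(max_words):
        options = follows.get(words[-1])  # .get() avoids adding empty entries
        if not options:                   # no known continuation: stop
            break
        words.append(options.most_common(1)[0][0])
    return " ".join(words)


corpus = [
    "a volcano is dormant when it has not erupted recently",
    "an active volcano erupts often",
    "magma is molten rock",
]
model = learn(corpus)
print(continue_text(model, "a volcano is dormant"))
# a volcano is dormant when it has not erupted recently
```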

Contextual Understanding: One of the strengths of generative AI is its ability to maintain context over longer pieces of text. It can refer back to earlier parts of a conversation to keep the generated content coherent and relevant, up to a limit: each model can only take in a certain amount of text at once, known as its context window. Maya is impressed that, as long as she stays in the current conversation thread, she can refer back to questions and answers from 60 minutes ago, and the chat will follow her line of questioning and build on its earlier answers. She tests this by asking, “Please create a lesson plan addressing the earlier facts you gave me about the geography and history of the volcano.”

In short, generative AI works by training a neural network on large datasets to learn patterns in the data, which enables it to generate new content based on the learned knowledge. The quality of the generated content depends on the quality of the training data, the architecture of the model, and the parameters used during the generation process.
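One way to picture a context window is the toy Python sketch below, which keeps only the most recent words of a conversation. The 12-word limit, the sample conversation, and counting whole words are assumptions made to keep the example small; real models count subword tokens, and their limits are far larger and vary from model to model.

```python
CONTEXT_LIMIT = 12  # hypothetical limit in words; real models allow far more tokens


def visible_context(conversation, limit=CONTEXT_LIMIT):
    """Join all turns of a chat and keep only the most recent `limit` words."""
    words = " ".join(conversation).split()
    return words[-limit:]


conversation = [
    "Maya: Where is Mount Etna?",
    "AI: Mount Etna is in Sicily, Italy.",
    "Maya: Make a lesson plan about it.",
]
print(" ".join(visible_context(conversation)))
# Etna is in Sicily, Italy. Maya: Make a lesson plan about it.
```

With this tiny limit, Maya’s original question has already scrolled out of view, which is the same reason a very long chat can eventually “forget” its earliest details.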

LIMITATIONS OF GENERATIVE AI

There are some obvious and not-so-obvious limitations to generative AI models. Because they generate content by predicting the most likely next word based on patterns in their training data, they can sometimes produce incorrect or nonsensical information, especially if your prompt is phrased in a confusing or unusual way.

For example, in the early days of the AI tool, if Maya asked it “What’s heavier, a pound of bricks or two pounds of feathers?” the chatbot would answer “They both weigh the same, which is one pound. This is because a pound is a unit of measurement for weight and it is a constant value. So, whether you have a pound of bricks or two pounds of feathers they both weigh one pound in total.”

Obviously, the correct answer is that two pounds of feathers weighs more than a pound of bricks. So why would a sophisticated AI tool make such a silly error? Because in its dataset, bricks and feathers most often appear together in the classic riddle, ‘What is heavier, ONE pound of bricks or ONE pound of feathers?’ In that case, the answer is that they weigh the same! Since it predicts text based on patterns in its dataset, the chatbot answers the riddle, not the question actually asked. While this is a playful example of the AI tool getting something wrong, inaccurate information could be a serious issue. This is why you should always verify and confirm the answers that ChatGPT and other generative AI tools provide.
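The toy Python sketch below exaggerates this failure so it is easy to see. It predicts each next word from only the previous two words (a “trigram” count), and its made-up training text contains the classic riddle many times but the correct fact only once. Real chatbots consider the whole prompt and are far more capable, so this is an analogy for how a familiar pattern can crowd out the actual question, not a reproduction of ChatGPT’s behaviour. Punctuation such as “?” is treated as its own word here for simplicity.

```python
from collections import Counter, defaultdict


def learn_trigrams(corpus):
    """Count which word follows each pair of consecutive words."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        w = sentence.lower().split()
        for a, b, c in zip(w, w[1:], w[2:]):
            follows[(a, b)][c] += 1
    return follows


def answer(follows, prompt, max_words=6):
    """Extend the prompt greedily and return only the newly generated words."""
    words = prompt.lower().split()
    for _ in range(max_words):
        options = follows.get((words[-2], words[-1]))
        if not options:
            break
        words.append(options.most_common(1)[0][0])
    return " ".join(words[len(prompt.split()):])


# The riddle appears many times; the correct fact about two pounds only once.
riddle = "what is heavier a pound of bricks or a pound of feathers ? they weigh the same"
corpus = [riddle] * 20
corpus.append("two pounds of feathers weigh more than one pound of bricks")

model = learn_trigrams(corpus)
print(answer(model, "what is heavier a pound of bricks or two pounds of feathers ?"))
# they weigh the same
```

Even though the correct fact is in the training text, the words right before the answer look exactly like the riddle, so the riddle’s answer wins.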

I hope you enjoyed this first excerpt from Generative A.I. in Schools. How have you seen A.I. being used in schools in your context? Share your thoughts in a comment below.

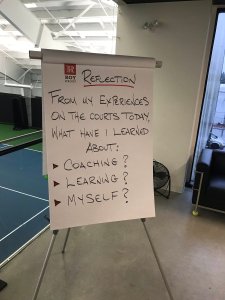

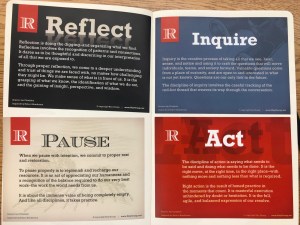

Through our second activity we started to explore what meaningful feedback looks like. We were partnered up to complete a task which involved tossing tennis balls from a seated position, and through multiple iterations discovered the relative value of encouragement versus detailed information as feedback. This is where we also began to examine coaching as a vehicle for feedback, mentorship and, ultimately, leadership.

In the afternoon, this understanding was further refined as we hit the tennis court to start using a coaching model for providing feedback. Chris kicked off the session with an introduction to tennis pro turned philosopher Tim Gallwey. Gallwey is the author of The Inner Game of Tennis, a psychological examination of sports performance. In it, he describes two selves: Self 1, which is analytical and ego-driven and gets in the way of Self 2, which is more unconscious, intuitive and physical. When an athlete is ‘in the zone’, they are fully realizing their Self 2 potential. The secret to the Inner Game is really learning how to get Self 1 out of the way of Self 2.

The ideas we had established earlier about quality feedback needing to be more informative than encouraging were also reinforced through this activity. We each had an opportunity to try all 3 roles, and from this activity I learned that as a coach or mentor it’s important to be highly attentive, to allow the student to define their own goals, and to remember that learning is a reflective process that works best when people feel safe.

And so for homework, we were challenged to carve out an authentic and meaningful pause: to take a break from the day and make a conscious effort to relax at some point between the end of our Thursday and the start of our Friday. Unfortunately for the Handsworth participants, this also happened to be our Parent-Teacher Interview evening. But needless to say, we did our best!

Our culminating activity for the day was an outdoor competitive group challenge. We were divided into 4 teams, each with a coach to guide the team using the Question Funnel and a supercoach to apply The Feedback Model with the coach. Our team challenge was a timed obstacle course that brought together many of the concepts we had already learned, including having the coachees (the team) determine their own goals. We were encouraged to practice our questioning techniques rather than telling people what to do, and were reminded that leaders are able to check their emotions by grasping themselves, grasping their team, and finally grasping the task at hand.

This past month I read George Couros’ The Innovator’s Mindset. It came at a good juncture in my professional growth as I move from teaching to administration. The book walked a nice line between a teacher’s and an administrator’s perspective on how to foster a culture of innovation in classrooms and in a school as a whole. As with any book on education, I try to see whether what I read simply reinforces my existing beliefs or challenges me to see things differently. While much of what Couros writes was already in line with my beliefs, there was a lot of food for thought in the book, and there were moments where I paused to reflect on my own experience and on ways I could reframe some of the work we’re doing at Handsworth. I thought I might share a few notes and highlights from the book that really resonated with me.

One of the quotes Couros cited early in the book was from American educator and author Stephen Covey, who talks about the speed of trust. As someone who subscribes completely to the idea that any organization is only as strong as its people and the relationships between them, I agree wholeheartedly that things get done, and get done faster, where there is an established culture of trust. This culture is developed, as Couros says, “by the expectations, interactions, and, ultimately, the relationships of the entire learning community.” But, even more importantly, relationships are built first on a one-to-one basis. I like Couros’ suggestion that, as an administrator, it is important to work with smaller staff groups of 2 to 4 people to create an intimacy that is lacking in larger, assembly-style groups. I have been fortunate to work with some great district administrators in North Vancouver who have also worked hard to build relationships with staff in those types of smaller working groups.

Networking is so important because, as the book puts it, “alone we are smart, together we are brilliant.” Strong relationships create an environment for innovation.

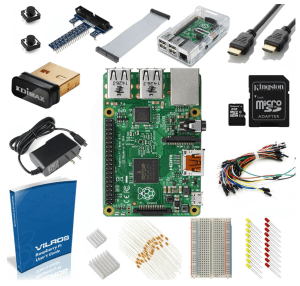

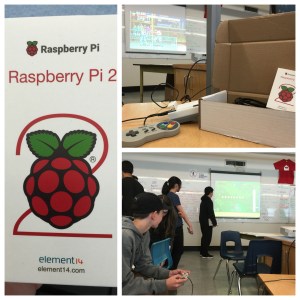

A friend of mine had turned a Raspberry Pi into a Super Nintendo emulator. I brought it into the class and plugged it in for the kids to see. It’s one thing to tell students what they can do, but being able to show them a finished product was even cooler. This inspired some students to research how to make one themselves. Because some of the larger projects might require students to buy peripheral hardware like controllers, I offered an added incentive: anyone who came up with a substantial project that required some personal investment on their part could take the Raspberry Pi home permanently once their project was finished.
